SAD¶

Overview¶

Inno Lake Prep is the preprocessing for data from multiple source using columnar processor

SAD - System Architechture Design¶

Tech Stack¶

Our component for this warehouse:

-

Build transformation pipelines by

DBT, incuded SQL transformations, yml for generic and custom tests, instruction, ... -

Database backend: BigQuery (columnar database)

-

CICD: Using GitHub Actions, CloudBuild

-

Deployment with Cloud Run

-

Tools: Google Cloud CLI

-

It packages by the container and ship with

Cloud Runinvoke byCloud Scheduler.

[1] Developer Process, copy from Christophe Oudar

[2] Environments:

There are 3 envs related with git branch

-

Local development

-

Staging

-

Production.

[3] Architechture Component

[Internal]

inno-lake-prep

-

Run single command from server [Sieu: fastapi + execute server]

-

Linked with

Go-> Transfer tofastapi+ Cloud Tasks -

Cost infisicent

-

Overall: 4

-

1 Cloud Run + 1 Cloud Task

-

Dockerize Server to execute command in backend

-

Cloud Schedule ← Workflow → Trigger Cloud Run → Report

-

Removed Workflow triggered Cloud Schedule

https://robertsahlin.com/serverless-dbt-on-google-cloud-platform/

The ETL should be control in different context

For new deployment on Lake

Trigger run workflows ETL full-refresh

For a diffrent mode on the trading date

The schedulers revoke the workflows jobs related.

The lake folder represent raw data sources component

An executor for data transformation actions in the cloud analytics warehouse

Serverless with DBT

Mostly tables is defined in schema raw

The yml file declare is based on vendors, global configuration related to.

Component of each lake:

+ Prefix: `lake_*` with `*` represented for vendors code

+ Tables mapping to lake is mostly that has prefix of that vendors.

inno-lake-prep

-

Run single command from server [Sieu: fastapi + execute server]

-

Linked with

Go-> Transfer tofastapi+ Cloud Tasks -

Dockerize Server to execute command in backend

-

Cloud Schedule ← Workflow → Trigger Cloud Run → Report

-

Removed Workflow triggered Cloud Schedule

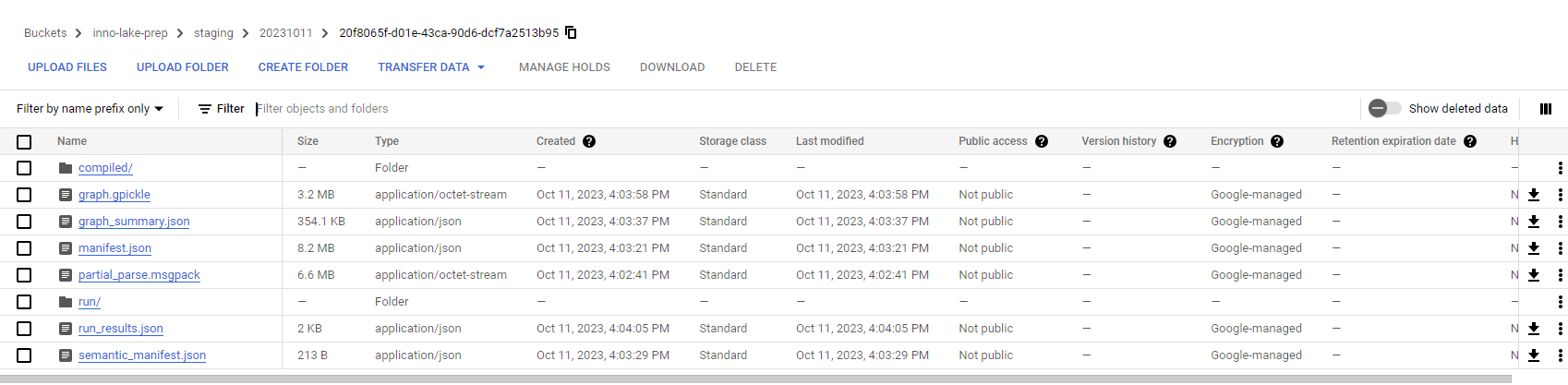

output path of gcs

Below is the design SAD for this solution.

Cloud Run

Name: morphling-target

Cloud Scheduler

Scheduler name: prod_bq2silencer_*_strategy

Note: * is the name of table transfer from Bigquery to PostgreSQL

The above scheduler jobs will transfer data from BigQuery to PostgreSQL.

Screenshot of workflows:

Screenshot of email:

Note: target is the target deployment of morphling.

The above cloud run will streaming data from Cloud SQL to Bigquery.

[^1] Deployment environment: Currently set on production only. So, for the staging, the way to invoke is mannual by Cloud Run or Workflows

[^2] Scheduler configuration: a) Timezone: ref to https://en.wikipedia.org/wiki/List_of_tz_database_time_zones

[2] Permissions required¶

For runners: sa-dbt-user@$PROJECT_ID.iam.gserviceaccount.com with roles roles/bigquery.dataEditor to write to BigQuery roles/bigquery.jobUser and run BigQuery jobs

For Cloud Build: use default one

- Acess secret manager

Cloud Run and Volumes Cloud Run does not yet support the volumes, but you can simulate this support thanks to Secret Manager integration and for a specific use case. I used it for a Spring boot deployment and it’s really helpful!

Source Reference¶

https://robertsahlin.com/serverless-dbt-on-google-cloud-platform/

Appendix¶

Appendix A: Record of Changes¶

Table: Record of changes

| Version | Date | Author | Description of Change |

|---|---|---|---|

| 0.1.0 | 06/04/2024 | Bao Truong | Initation documentation |

-

Build local dockerize application. Ref: https://cloud.google.com/run/docs/building/containers#builder ↩