Troubleshooting¶

Overview¶

Not all changes to the source schema can be detected automatically, and undetected changes may corrupt data. The following schema changes may cause data corruption or failure to process the events downstream:

- Dropping columns
- Adding columns to the middle of a table
- Changing the data type of a column
- Reordering columns
- Dropping tables (relevant if the same table is then recreated with new data added)
- Truncating tables

Detail¶

The destination table is not transferred after the schema has changed¶

Context:

Changed the schema -> paused the stream, deleted the data in GCS and BigQuery, initiated a backfill -> the target table does not exist

Reason:

Hypothesis [not confirmed]: there are no records in GCS for Dataflow to process. As a workaround, we simulate the process by running TRUNCATE (which does not affect the stream) and then restoring a snapshot with INSERT INTO (which is picked up by the stream).

Handle:

[0] Preparing:

- The Datastream stream is in the PAUSED state

In the source database:

[1] Create a duplicate of the table in a temp schema or staging zone

[2] Truncate the current table. This does not affect the streaming process (TRUNCATE is among the known limitations)
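The source-side steps can be sketched as a small shell helper that only prints the SQL, so it can be reviewed before being piped to a MySQL client. The function name `build_refill_sql` and the schema and table names are hypothetical. The final `INSERT INTO ... SELECT` is an assumption based on the snapshot step mentioned under Reason, not a confirmed procedure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the SQL for the TRUNCATE + INSERT INTO workaround.
# Arguments: source schema, table name, staging schema (all illustrative).
build_refill_sql() {
  local src_schema="$1" table="$2" staging_schema="$3"
  cat <<SQL
-- [1] Duplicate the table (structure, then rows) into the staging schema
CREATE TABLE ${staging_schema}.${table} LIKE ${src_schema}.${table};
INSERT INTO ${staging_schema}.${table} SELECT * FROM ${src_schema}.${table};
-- [2] Truncate the current table
TRUNCATE TABLE ${src_schema}.${table};
-- Assumed follow-up: re-insert the snapshot so the rows are written as new INSERT events
INSERT INTO ${src_schema}.${table} SELECT * FROM ${staging_schema}.${table};
SQL
}

# Example (names are placeholders): review the output first, then pipe it to your client.
# build_refill_sql app orders staging
```

Printing the statements instead of executing them keeps the sketch safe to run anywhere and leaves the choice of client and credentials to the operator.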

Then check the process and the table in the destination database

If the table exists with the same schema, the workaround worked

Resume the Datastream stream so that it is in the RUNNING state

Clean up the temporary resources afterwards
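Pausing the stream before the procedure and resuming it afterwards can be done from the CLI. The following is a minimal sketch, assuming the `gcloud datastream streams update` command with its `--state` and `--update-mask` flags. The function name `set_stream_state` and the `GCLOUD` override (used only for dry runs) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switches a stream to PAUSED or RUNNING.
# Arguments: stream ID, location, target state.
set_stream_state() {
  local stream_id="$1" location="$2" state="$3"
  case "$state" in
    PAUSED|RUNNING) ;;
    *) echo "unsupported state: $state" >&2; return 1 ;;
  esac
  # GCLOUD is an illustrative override: set GCLOUD=echo to print the command instead of running it.
  "${GCLOUD:-gcloud}" datastream streams update "$stream_id" \
    --location="$location" --state="$state" --update-mask=state
}

# Example (placeholders):
# set_stream_state "$STREAMING_ID" "$PROJECT_LOCATION" PAUSED    # before step [1]
# set_stream_state "$STREAMING_ID" "$PROJECT_LOCATION" RUNNING   # after the checks
```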

Note:

- Make sure the source and destination tables have the same schema
- Check at least one existing record (if any) to confirm the transfer
- This approach assumes the dataset is not big (< 10M rows)

Following documentation:

https://www.devart.com/dbforge/mysql/studio/mysql-copy-table.html

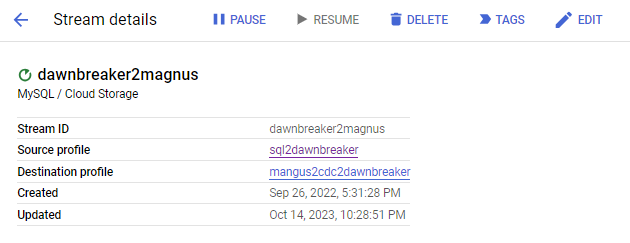

Check the streaming is RUNNING¶

Context:

Check whether a stream is in the RUNNING state

Handle:

You can use two methods: [1] the gcloud CLI, or [2] the UI

[1] Using gcloud CLI

declare STREAMING_ID=dawnbreaker2magnus

declare PROJECT_LOCATION=<project-region>

gcloud datastream streams describe "$STREAMING_ID" --location="$PROJECT_LOCATION" --format=json | yq '.state'

[2] Using the UI

Go to the URL constructed as follows

declare PROJECT_ID=<project-id>

declare STREAMING_URI="https://console.cloud.google.com/datastream/streams/locations/$PROJECT_LOCATION/instances/$STREAMING_ID;tab=overview?project=$PROJECT_ID"
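For scripted checks, the CLI method from [1] can be wrapped so that a non-RUNNING state produces a non-zero exit code. This is a minimal sketch. The function names are hypothetical, and it uses gcloud's `--format='value(state)'` projection instead of `yq`, which is a substitution and not part of the original command.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds only when the given state string is RUNNING.
stream_is_running() {
  [ "$1" = "RUNNING" ]
}

# Hypothetical helper: prints the state of a stream (stream ID, location).
get_stream_state() {
  gcloud datastream streams describe "$1" --location="$2" --format='value(state)'
}

# Example (placeholders):
# if stream_is_running "$(get_stream_state "$STREAMING_ID" "$PROJECT_LOCATION")"; then
#   echo "stream is RUNNING"
# else
#   echo "stream is not RUNNING" >&2
# fi
```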

Following documentation:

Gcloud SDK Datastream describe

Failed to start the stream because of missing permissions¶

Datastream doesn't have MySQL's REPLICATION SLAVE permission on the source database. Datastream failed to check the binary log files.

Failed writing to the destination bucket “streaming-magnus” because Datastream's service account is missing the necessary permissions. See Destination setup for more details.

You can use the stream.update() API and add &force=true to the request to skip validation when starting the stream.
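A request of that kind might look like the sketch below, which only builds the URL and shows the call in a comment. It assumes the Datastream v1 REST endpoint and a PATCH on the stream resource with `updateMask=state`. The function name `force_start_url` and the `PROJECT_ID` variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds the update URL with validation skipped.
# Arguments: project ID, location, stream ID.
force_start_url() {
  printf 'https://datastream.googleapis.com/v1/projects/%s/locations/%s/streams/%s?updateMask=state&force=true\n' \
    "$1" "$2" "$3"
}

# Example call (assumes an authenticated gcloud session; values are placeholders):
# curl -X PATCH \
#   -H "Authorization: Bearer $(gcloud auth print-access-token)" \
#   -H "Content-Type: application/json" \
#   -d '{"state": "RUNNING"}' \
#   "$(force_start_url "$PROJECT_ID" "$PROJECT_LOCATION" "$STREAMING_ID")"
```

Skipping validation only bypasses the checks at start time. The missing permissions reported above still have to be granted for the stream to read the binary log and write to the bucket.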

Source Reference¶

- [1] Turn on the Datastream stream and make sure it is online. See "Check the streaming is RUNNING"